You call a meeting to "align on priorities." Your team spends two hours in a conference room. Decisions get deferred pending "more data." Everyone leaves to update their status decks for next week's follow-up.

You just cost your team 10 hours of productive work. What did they get in return?

If the answer isn't something concrete and valuable, you're net negative. And most managers are.

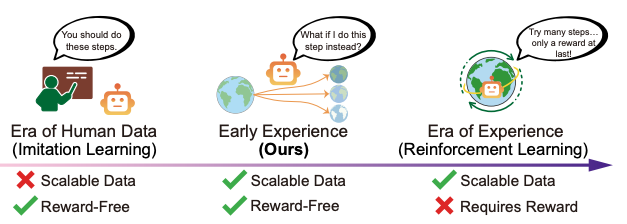

I've been testing a framework inspired by Roger Martin's A New Way to Think. His concept applies to organizational layers, corporate strategy, and entire business units. But the core idea hit me hard: every layer above the front line must add more value than it costs. If it doesn't, it weakens the competitive position.

I apply it to my functioning. One question before every initiative: Does this help my reports win more than it costs them?

What does that look like in practice? Here are the shifts:

The value-add question

Before I schedule a meeting, create a process, or ask for a deliverable, I force myself to answer: Does this help more than it costs?

Time is the cost. Coordination overhead is the cost. Delayed decisions are the cost.

If I can't articulate a specific value that exceeds those costs, I kill it. Even if my peers do it. Even if it's "standard practice."

What your reports actually need

I flipped my 1-on-1s. Instead of collecting status, I ask: "What do you need that I can provide or unblock?"

The shift is fundamental. Your reports aren't your employees—they're your customers. I justify my existence by providing services they can't get more efficiently elsewhere.

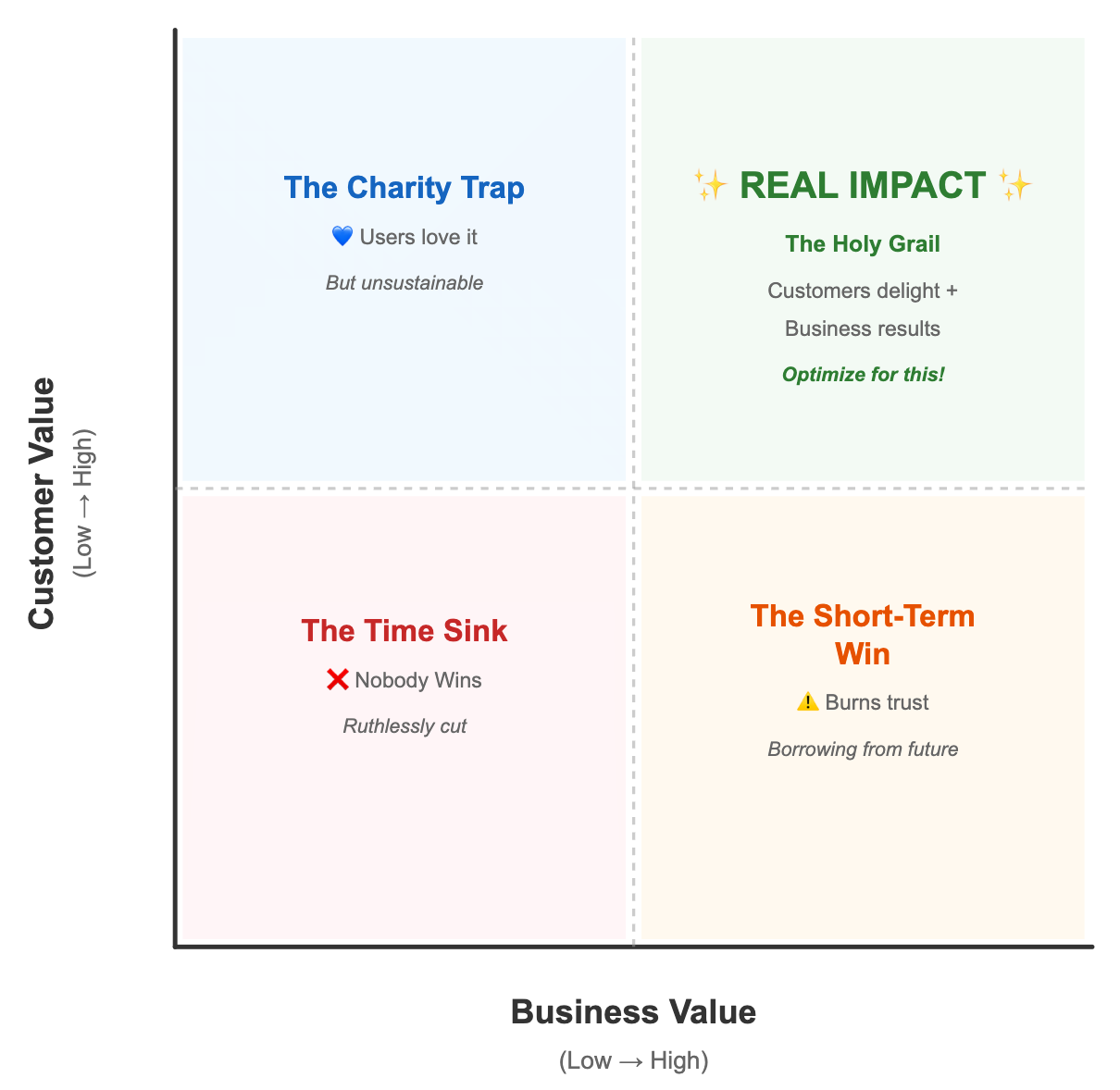

Legitimate services: strategic rigor, negotiating vendor contracts, building executive relationships, creating shared capabilities, and removing obstacles. Roll up my sleeves for prototyping and building as necessary.

Not legitimate: coordination and alignment that doesn't produce outcomes, strategic oversight that doesn't make them more competitive, process standardization that doesn't reduce their work.

Most of what managers do falls in the second category. I'm trying to kill it.

The elimination test

If I disappeared tomorrow, what would break? That's my real value. Everything else is coordination theater.

Stay connected to real competition

I spend time where my team's work actually competes. Customer calls, demos, key artifacts for solution discovery and building. Anywhere customers make choices.

Competition doesn't happen in strategy decks. It happens at the front line, where a customer picks your product over the alternative.

Why this works

Most managers optimize for boss satisfaction, peer coordination, and risk minimization.

If you optimize for your direct reports' competitive advantage instead, you create alpha. Your team ships faster. They win more customers. Morale improves.

You're not changing the org chart or fighting power structures. You're shifting from coordination to capability-building. That shift requires no executive approval. It's a choice.

The organization may not reward it explicitly. But your team's results will compound over time. The performance difference becomes undeniable.

Not prescriptive, just experimental

This is what I'm testing in my own work. It's not the only way to manage. I'm learning as I go, adjusting when things don't work.

Roger Martin's framework goes much deeper than what I've described here. His book is worth reading if you're interested in the broader implications challenging broad spectrum of existing operating models.

For me, the practical takeaway was simpler: I can choose to be net positive or net negative to my team. That choice is mine to make.

So I keep asking the question: Does this help my reports win more than it costs them?

And I keep killing the things that fail the test.