OpenAI’s GPT-realtime Brings a Step Forward in Voice AI

For years, voice AI has felt like a half-step behind its text-based counterpart. The standard architecture relied on a clunky chain: speech-to-text, a language model for reasoning, then text-to-speech. The result was often laggy, robotic, and disconnected from the flow of natural conversation.

OpenAI’s new GPT-realtime changes that dynamic. By unifying speech recognition, reasoning, and speech synthesis into a single model, it removes the hand-offs between stages that made past systems slow and frustrating. The model not only hears and responds in real time but also preserves tone and conversational nuance, which chained pipelines tend to lose once speech is flattened into text.

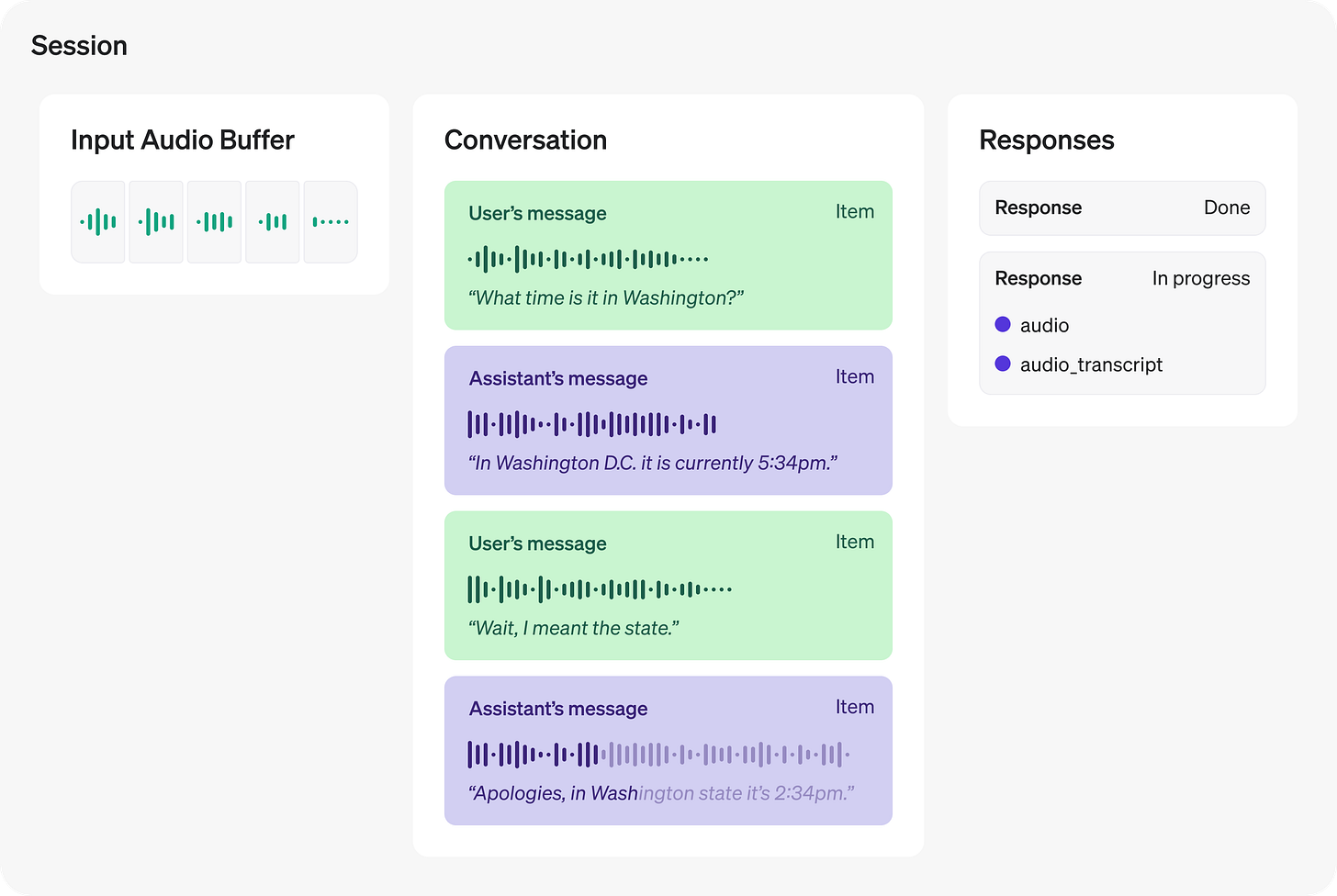

Image: From Realtime Conversations documentation

The Technical Leap

Benchmarks highlight the shift. On Big Bench Audio, a reasoning test, GPT-realtime reached 82.8% accuracy compared with 65.6% for the previous model. On MultiChallenge Audio, which measures instruction following, it scored 30.5% against 20.6%. Even in complex function calling, performance rose to 66.5% from 49.7% (Dev.to analysis).
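To put those numbers side by side, here is a minimal sketch that works out the gain on each benchmark in percentage points and in relative terms. The scores are the ones quoted above; the dictionary layout and the `gains` helper are illustrative names, not part of any OpenAI tooling.

```python
# Scores quoted in the paragraph above, in percent: (GPT-realtime, earlier baseline).
scores = {
    "Big Bench Audio": (82.8, 65.6),
    "MultiChallenge Audio": (30.5, 20.6),
    "Complex function calling": (66.5, 49.7),
}


def gains(new, old):
    """Return (percentage-point gain, relative gain in percent), rounded to 0.1."""
    return round(new - old, 1), round((new - old) / old * 100, 1)


# Hypothetical summary structure: benchmark name -> (points, relative %).
summary = {name: gains(new, old) for name, (new, old) in scores.items()}

for name, (points, relative) in summary.items():
    print(f"{name}: +{points} points ({relative}% relative)")
```

The two views tell different stories: the largest absolute jump is on Big Bench Audio, while the largest relative jump is on MultiChallenge Audio, where the starting score was lowest.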

This matters for product teams. Faster response time and higher accuracy mean users can treat voice AI as an actual conversational partner, not a clunky intermediary. Add to this a 20% drop in cost, plus new, natural-sounding voices like Cedar and Marin, and GPT-realtime becomes not just a technical upgrade but a usability breakthrough.

Early Adoption Is Already Underway

The shift is not theoretical. Zillow has begun experimenting with GPT-realtime to make property searches conversational and intuitive. Instead of typing filters, a user can simply ask, “Show me three-bedroom homes near downtown with a fenced yard,” and get results instantly, complete with natural follow-up.

Other use cases are emerging quickly:

Customer support agents that handle calls fluidly, thanks to built-in SIP integration.

Tutoring and educational tools that listen, see (through image input), and respond in real time.

Healthcare support assistants that guide patients through intake or follow-up conversations with more empathy.

These aren’t edge cases—they are everyday workflows where latency and naturalness make or break the experience.

What It Means for Product Leaders

For product managers and technologists, GPT-realtime offers a rare combination: a step-change in technical capability and clear enterprise relevance. The faster integration path—no more stitching together speech-to-text and text-to-speech pipelines—means teams can experiment more quickly. And because the model can combine voice with vision, entirely new multimodal interfaces are now possible.

The strategic implication is clear. Voice AI is moving from a novelty to a platform-level capability. Companies that start experimenting now will be positioned to set user expectations, not react to them.

Looking Ahead

GPT-realtime marks the moment voice AI feels natural enough to use daily. It’s faster, more accurate, and already in the hands of innovators. For product leaders, the takeaway is simple: treat this not as a future opportunity but as a present one.